On the 20th of October, at the monthly community event of Autoexec.gr, I had the pleasure, as a member, of speaking about Storage Spaces Direct, Microsoft’s vision and proposal for Software Defined Storage. It sounds like a new product, doesn’t it? Well, it’s not! It’s actually a new feature that came along with the release of Windows Server 2016!

So what is Storage Spaces Direct?

- Software-defined, shared-nothing storage. It doesn’t use proprietary or any other kind of special hardware. The servers use local commodity disks, not shared trays or enclosures. Think of it as a Failover Cluster whose nodes have local storage, where that storage is pooled together and used by every node in the cluster.

- It is not a new product, it’s a feature.

- Highly available and scalable

- Storage for Hyper-V, Scale-Out File Server and SQL Server

Why Storage Spaces Direct?

- Servers with local, industry-standard commodity storage

- Lower-cost flash with SATA SSDs

- Better flash performance with NVMe SSDs

- Ethernet/RDMA network as storage fabric

It is available in Windows Server 2016 Datacenter Edition, with all installation options: the new Nano Server, Server Core and Desktop Experience (Full).

Under the hood

File System (CSVFS with ReFS or NTFS)

- Cluster-wide data access

- Fast VHDX creation (ReFS), expansion and checkpoints

- Data Deduplication (NTFS)

Storage Spaces

- Scalable pool with all disk devices

- Resilient virtual disks

- Built-in, always-on cache

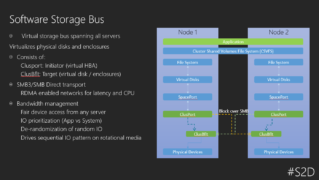

Software Storage Bus

- Storage Bus Cache

- Leverages SMB3 as the storage fabric (along with all the cool new features of SMB3, such as SMB Direct and SMB Multichannel)

- Storage QoS

Servers with local disks

- SATA, SAS and NVMe

Hardware Configurations

- Single-Tier means we can have only one type of physical storage (SATA HDD, SSD or NVMe)

- Two-Tier means we can have two types of physical storage (SATA HDD + SSD, SATA HDD + NVMe or SATA SSD + NVMe)

- Three-Tier means we can blend all the supported types (SATA HDD + SSD + NVMe)

So what is the point of blending different types of physical storage? The always-on cache, of course! When new data comes in, Storage Spaces Direct keeps it in the cache tier (always the fastest medium), and once that data gets cold (its I/O cools down), it is destaged automatically to the capacity tier. This tiering happens in real time, not on a schedule!
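To make the cache-and-destage behavior more concrete, here is a minimal Python sketch. It is a toy model, not how S2D is implemented: the speed ranking in `MEDIA_SPEED`, the `pick_cache_medium` helper, the `TieredStore` class and the idea of measuring "cold" with a logical clock (`cold_after`) are all assumptions made for illustration. It picks the fastest media type as the cache, sends every new write to the cache, and destages blocks to the capacity tier once they have not been touched for a while, checking on every I/O rather than on a schedule.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical speed ranking (higher = faster); only the ordering matters here.
MEDIA_SPEED = {"HDD": 1, "SSD": 2, "NVMe": 3}


def pick_cache_medium(media: set[str]) -> str | None:
    """Return the fastest media type as the cache, or None for a single-tier setup."""
    if len(media) < 2:
        return None  # one media type only: nothing faster to cache with
    return max(media, key=MEDIA_SPEED.__getitem__)


@dataclass
class TieredStore:
    """Toy write-back cache in front of a capacity tier.

    Assumption: a block is "cold" once it has not been accessed for more than
    `cold_after` ticks of a logical clock. This is not the real S2D algorithm.
    """

    cold_after: int
    clock: int = 0
    cache: dict[str, tuple[bytes, int]] = field(default_factory=dict)  # block -> (data, last access)
    capacity: dict[str, bytes] = field(default_factory=dict)           # block -> data

    def write(self, block: str, data: bytes) -> None:
        # New data always lands in the cache tier first.
        self.clock += 1
        self.cache[block] = (data, self.clock)
        self.capacity.pop(block, None)  # an older copy on the capacity tier is now stale
        self._destage_cold()

    def read(self, block: str) -> bytes:
        self.clock += 1
        if block in self.cache:
            data, _ = self.cache[block]
            self.cache[block] = (data, self.clock)  # a cache hit keeps the block hot
        else:
            data = self.capacity[block]  # cold data is served from the capacity tier
        self._destage_cold()
        return data

    def _destage_cold(self) -> None:
        # Checked on every I/O rather than by a scheduled job.
        cold = [b for b, (_, t) in self.cache.items() if self.clock - t > self.cold_after]
        for block in cold:
            data, _ = self.cache.pop(block)
            self.capacity[block] = data


print(pick_cache_medium({"HDD", "SSD", "NVMe"}))  # NVMe
store = TieredStore(cold_after=2)
for name in ["a", "b", "c", "d"]:
    store.write(name, name.encode())
print(sorted(store.cache), sorted(store.capacity))  # ['b', 'c', 'd'] ['a']
```

After four writes, block `a` has cooled down and moved to the capacity tier, while the three most recent blocks are still served from the cache.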

Deployment Scenarios

There are three of them.

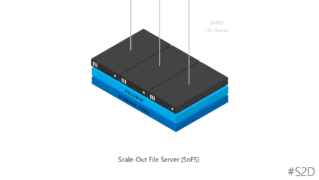

- Scale-Out File Server (disaggregated)

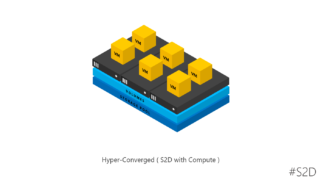

- Hyperconverged (Hyper-V)

- SQL Server

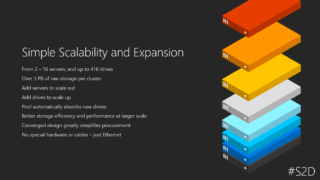

And if we need to scale? We can easily scale up by adding disks to the existing nodes, or scale out by adding more nodes to the cluster.

The general configuration limits are:

- 2 to 16 nodes per cluster

- Up to 416 disks

Cluster-specific configuration options:

- For a 2-node cluster, we can have two-way mirrored volumes; with 3 nodes, we can have two-way or three-way mirrored volumes.

- For a 4 to 16 node cluster, we can also have parity and hybrid volumes (erasure coding, LRC).
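The sizing rules above fit in a few lines of Python. This is a hypothetical planning helper, not a Microsoft tool: the function names are made up for illustration, and parity efficiency is deliberately left out because it depends on the layout S2D chooses. Three-way mirroring is only offered from three nodes up, because each copy of the data lives on a different server.

```python
from __future__ import annotations

# Limits as described in the talk (Windows Server 2016).
MIN_NODES, MAX_NODES, MAX_DISKS = 2, 16, 416

# Number of data copies kept by each mirror type.
MIRROR_COPIES = {"two-way mirror": 2, "three-way mirror": 3}


def resiliency_options(nodes: int, disks: int) -> list[str]:
    """Return the volume resiliency types available for a cluster of this size."""
    if not MIN_NODES <= nodes <= MAX_NODES:
        raise ValueError(f"S2D supports {MIN_NODES}-{MAX_NODES} nodes, got {nodes}")
    if not 0 < disks <= MAX_DISKS:
        raise ValueError(f"S2D supports up to {MAX_DISKS} disks, got {disks}")
    options = ["two-way mirror"]
    if nodes >= 3:
        # Each copy sits on a different node, so three copies need three nodes.
        options.append("three-way mirror")
    if nodes >= 4:
        options += ["parity", "hybrid"]
    return options


def usable_mirror_capacity_tb(raw_tb: float, mirror: str) -> float:
    """Usable capacity of a mirrored volume: every byte is stored once per copy."""
    return raw_tb / MIRROR_COPIES[mirror]


print(resiliency_options(nodes=2, disks=8))  # ['two-way mirror']
print(resiliency_options(nodes=4, disks=16))  # ['two-way mirror', 'three-way mirror', 'parity', 'hybrid']
print(usable_mirror_capacity_tb(12.0, "three-way mirror"))  # 4.0
```

The capacity helper makes the mirror trade-off explicit: a two-way mirror keeps half of the raw capacity usable, a three-way mirror a third.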

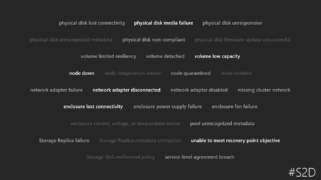

Resiliency

We have the following four types of resiliency in a Storage Spaces Direct cluster.

- Volume

- Enclosure

- Chassis

- Rack

How can we control all of this? Can S2D sense that a blade server sits in a particular enclosure? Of course not; that’s why we have fault domains. By definition, a fault domain is a set of hardware components that share a single point of failure. For fault tolerance, you need multiple fault domains. Fault domains are also a way of logically tagging and grouping hardware (rack, enclosure, chassis, location), and, at least for now, they can be configured only through PowerShell.
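Here is a minimal Python sketch of why fault domains matter. S2D does its own data placement internally; the `place_copies` function, the node and rack names, and the one-copy-per-rack rule below are hypothetical, chosen to show how logical tags let the software spread copies so that losing a single rack cannot take out every copy. In a real cluster, the tags themselves would be set through PowerShell, as described above.

```python
from __future__ import annotations

from collections import defaultdict


def place_copies(node_racks: dict[str, str], copies: int) -> list[str]:
    """Choose one node per fault domain (here: rack) for each copy of the data.

    Hypothetical placement rule for illustration: never put two copies in the
    same rack, so losing a whole rack costs at most one copy.
    """
    nodes_by_rack: dict[str, list[str]] = defaultdict(list)
    for node, rack in sorted(node_racks.items()):
        nodes_by_rack[rack].append(node)

    if len(nodes_by_rack) < copies:
        raise ValueError(
            f"{copies} copies need {copies} fault domains, "
            f"but only {len(nodes_by_rack)} are defined"
        )
    # Deterministic choice: first node of each of the first `copies` racks.
    return [nodes_by_rack[rack][0] for rack in sorted(nodes_by_rack)[:copies]]


# Hypothetical topology: the values play the role of logical rack tags.
topology = {"node1": "rack-A", "node2": "rack-A", "node3": "rack-B", "node4": "rack-C"}
print(place_copies(topology, copies=3))  # ['node1', 'node3', 'node4']
```

Without the rack tags, nothing would stop `node1` and `node2` from both holding copies, even though they share the same single point of failure.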

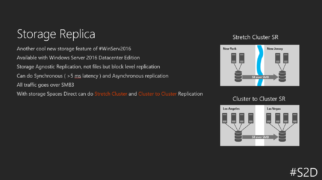

Storage Replica is also supported! It is another cool new feature of Windows Server 2016 (Datacenter Edition only) that performs block-level storage replication. In our case, it can work in the following scenarios:

- Stretch Cluster

- Cluster to Cluster

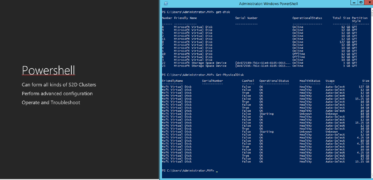

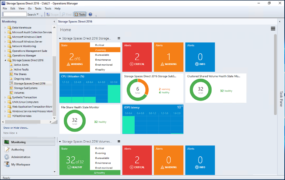

Manageability

Two methods are currently supported.

- PowerShell

- System Center Virtual Machine Manager 2016

We also get great real-time insights through System Center Operations Manager 2016 and the new S2D Health Service.

At the end of the presentation, we did a hands-on deployment of an S2D cluster. More specifically, we demonstrated the process of creating a two-node S2D hyper-converged cluster using System Center Virtual Machine Manager 2016, how to manage the resulting pool and how to create additional Cluster Shared Volumes. And all of this in less than 20 minutes!
